A Triple-Step Asynchronous Federated Learning Mechanism for Client Activation, Interaction Optimization, and Aggregation Enhancement

Published in IEEE Internet of Things Journal, 2022

Recommended citation: L. You, S. Liu, Y. Chang and C. Yuen, "A Triple-Step Asynchronous Federated Learning Mechanism for Client Activation, Interaction Optimization, and Aggregation Enhancement," IEEE Internet of Things Journal, vol. 9, no. 23, pp. 24199-24211, Dec. 2022, doi: 10.1109/JIOT.2022.3188556. https://ieeexplore.ieee.org/abstract/document/9815310

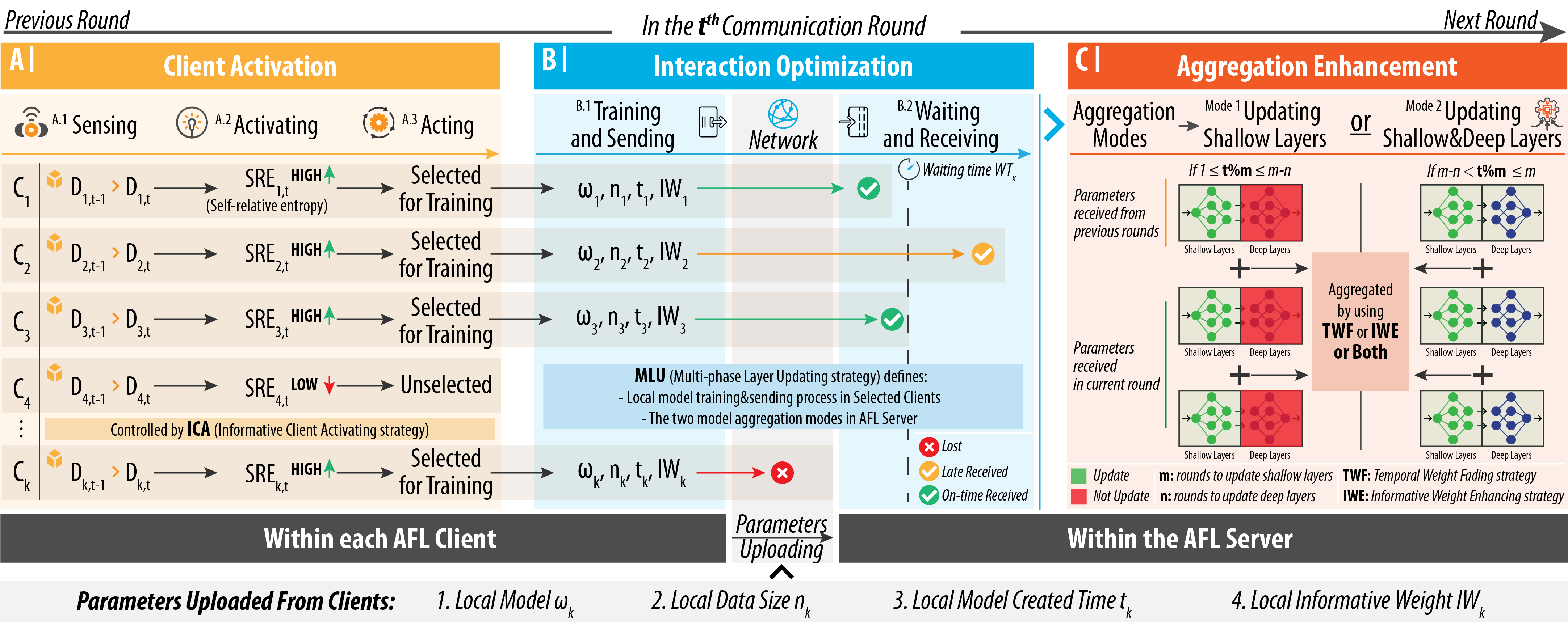

Abstract: Federated Learning in asynchronous mode (AFL) is attracting much attention from both industry and academia as a way to build intelligent cores for various Internet of Things (IoT) systems and services. It harnesses sensitive data and idle computing resources dispersed across a massive number of IoT devices in a privacy-preserving and interaction-unblocking manner. Since AFL is still in its infancy, it faces three challenges that need to be resolved jointly: 1) how to rationally utilize AFL clients, whose local data grow gradually, to avoid overlearning; 2) how to properly manage the client-server interaction so that communication cost is reduced and model performance is improved; and 3) how to effectively and efficiently aggregate the heterogeneous parameters received at the server to build a global model. To fill this gap, this article proposes a triple-step asynchronous federated learning mechanism (TrisaFed), which can: 1) activate clients with rich information according to an informative client activating strategy (ICA); 2) optimize the client-server interaction through a multiphase layer updating strategy (MLU); and 3) enhance the model aggregation function through a temporal weight fading strategy (TWF) and an informative weight enhancing strategy (IWE). TrisaFed is evaluated on four standard data sets. The results show that, compared with four state-of-the-art baselines, TrisaFed not only reduces communication cost by over 80% but also significantly improves learning performance, raising model accuracy by over 8% and training speed by over 70%.
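The abstract names the aggregation-side ideas (fading the weight of older updates, boosting the weight of more informative ones) but this page does not give the actual TWF or IWE formulas. The sketch below only illustrates the general idea of staleness-aware weighted aggregation in asynchronous FL. It is not TrisaFed's method. The exponential decay, the `decay` parameter, the use of local sample count as a proxy for "informativeness", and the names `ClientUpdate` and `aggregate` are all hypothetical choices made for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class ClientUpdate:
    # Hypothetical container for one asynchronous client upload.
    params: list[float]  # flattened model parameters sent by the client
    timestamp: int       # server round whose global model the client trained on
    num_samples: int     # local data size, used here as an assumed "informativeness" proxy


def aggregate(updates: list[ClientUpdate], current_round: int, decay: float = 0.5) -> list[float]:
    """Weighted average of client parameters (illustrative only, not TWF/IWE).

    Each update's weight is exp(-decay * staleness) * num_samples, so stale
    updates fade exponentially and clients with more data count more.
    """
    weights = []
    for u in updates:
        staleness = current_round - u.timestamp
        temporal = math.exp(-decay * staleness)  # assumed exponential fading
        weights.append(temporal * u.num_samples)
    total = sum(weights)
    dim = len(updates[0].params)
    # Element-wise weighted mean across clients.
    return [
        sum(w * u.params[i] for w, u in zip(weights, updates)) / total
        for i in range(dim)
    ]


if __name__ == "__main__":
    fresh = [ClientUpdate([0.0], timestamp=5, num_samples=1),
             ClientUpdate([4.0], timestamp=5, num_samples=3)]
    print(aggregate(fresh, current_round=5))
```

When all updates are equally fresh, the temporal factor is 1 for every client and the rule reduces to a plain sample-weighted average (FedAvg-style). Staleness only shifts weight away from updates computed on older global models.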

Keywords: Aggregation Enhancement, Asynchronous Federated Learning (AFL), Client Activation, Federated Learning (FL), Interaction Optimization